Shortages hit Akihabara as shops ration storage and memory

Japan’s signature electronics district, Akihabara, is feeling the squeeze from a global rush into artificial intelligence. Multiple computer retailers are capping the number of storage drives and memory kits each customer can buy, citing reduced deliveries and fast-shrinking inventories. Some shops limit buyers to a handful of items per group, with stricter caps for SSDs, HDDs and RAM. In certain stores, a full PC purchase unlocks higher limits, but staff warn that supply remains uncertain.

Distributors have paused shipments of specific models and capacities, and retailers say they have not been given a clear timeline for when normal flow will resume. That uncertainty is pushing prices upward, especially for performance parts prized by PC builders and enthusiasts. Store managers describe a moving target, where price lists are revised often and some popular configurations disappear entirely for stretches of time.

The core of the problem sits upstream. Soaring demand for AI infrastructure is pulling memory and storage into data centers at a pace consumer markets cannot match. Memory contract prices have already doubled in some categories. Meanwhile the shift from DDR4 to DDR5 is under way, squeezing availability of DDR4 as manufacturers retool production lines. Some companies have restarted or extended DDR4 production to meet demand for budget systems and upgrades, but volumes are nowhere near large enough to ease current retail pressure.

Why AI is draining consumer PC parts

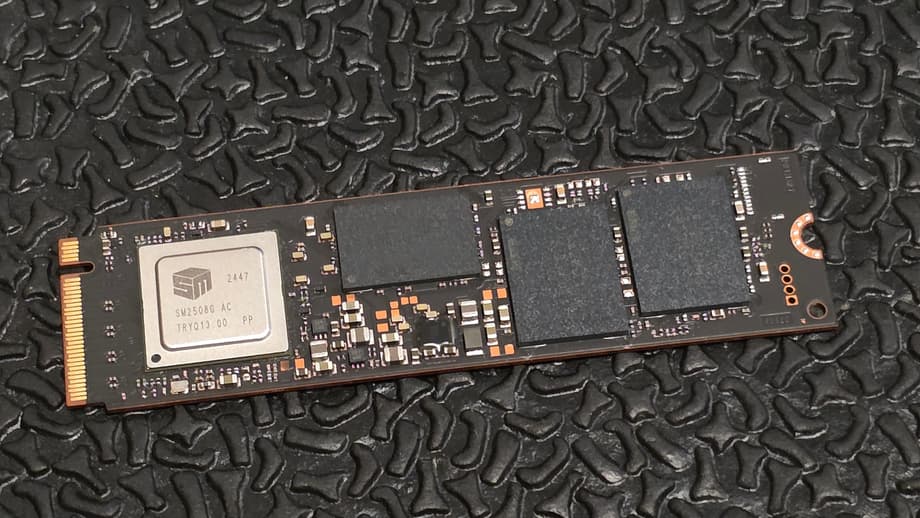

AI training and deployment need vast amounts of compute, and that compute needs memory and storage at scale. Every large language model runs on stacks of GPUs or custom accelerators. Those chips are paired with advanced memory like HBM for bandwidth, conventional DRAM for capacity, and huge arrays of SSDs and HDDs to stage and stream training data. When cloud providers ramp up capital spending on AI, the pull-through reaches the entire chain, from wafers and substrates to DRAM, NAND, controllers and even the racks that hold them.

How AI training changes the parts mix

Training a modern model is less about a single chip and more about feeding thousands of processors without a bottleneck. HBM, a type of stacked DRAM placed right next to the GPU, delivers extreme bandwidth so the chip is not starved for data. At the same time, systems still need conventional DRAM to cache parameters and intermediate results. This dual need drains both premium memory and mainstream DRAM pools. Storage demand climbs as well. Datasets span petabytes, so operators fill racks with SSDs for speed and HDDs for cost per terabyte. When hyperscalers place orders, their size and urgency often win out over retail distribution.

DDR4 versus DDR5

Consumer builders feel the pinch most with DDR4. Memory makers are prioritizing DDR5 for new platforms and HBM for AI accelerators. That shift leaves less capacity for DDR4. Companies that still ship cost-sensitive products or support large installed bases are stockpiling DDR4. The result is tight supply at retail and higher prices for people looking to extend the life of older systems.

Some manufacturers have restarted DDR4 lines or extended runs to address demand, but these moves are small relative to the surge from AI and data center builds. In practice, more wafers are going to DDR5 and HBM, and each wafer that moves away from DDR4 keeps shelves thin for consumers.

Prices climb and older DDR4 dwindles

Sticker shock is already visible. Memory prices in consumer channels have climbed fast, and many buyers are seeing the same kit cost twice as much as it did just months ago. Storage is tracking a similar path, especially for popular SSD capacities used in gaming and content creation.

Sticker shock in real numbers

Recent retail snapshots show DDR5 kits that were around 104 dollars for 32 GB now closer to 220 dollars. A two-stick pack of 16 GB DDR4 that sold for about 52 dollars in late spring now sits around 115 dollars in several listings. Retail price trackers began logging steady increases in early autumn, then a steeper climb this winter.
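
The arithmetic behind those snapshots is simple to check. The following minimal sketch only re-derives the price multiple and percentage increase from the approximate listing prices quoted above. The function name `price_change` is a hypothetical helper and is not tied to any price tracker.

```python
def price_change(before: float, after: float) -> tuple[float, float]:
    """Return (price multiple, percent increase) between two prices."""
    multiple = after / before
    percent = (after - before) / before * 100
    return multiple, percent


# Approximate retail snapshots quoted in the text, in US dollars.
snapshots = {
    "DDR5 32 GB kit": (104, 220),
    "DDR4 two-stick 16 GB pack": (52, 115),
}

for name, (before, after) in snapshots.items():
    multiple, percent = price_change(before, after)
    print(f"{name}: x{multiple:.2f} (+{percent:.1f}%)")
```

Both kits come out slightly above a doubling, about 2.1 and 2.2 times their earlier prices. That is consistent with buyers seeing the same kit cost twice as much.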

Market researchers tie the jump to AI data center construction and a shift of capacity toward HBM and server memory. General-purpose DRAM used in PCs is getting less production time and fewer wafers, which tightens supply and pushes prices up. The DRAM market has always moved in cycles, with periods of glut and shortage, but the scale of AI orders and the complexity of expanding advanced packaging make a quick price correction less likely.

Storage buyers face a parallel story. Enterprise and cloud customers are absorbing high capacity SSDs and HDDs for AI training and inference clusters. When those orders spike, consumer focused models can go out of stock or jump in price while manufacturers rebalance allocations.

CPUs and other parts face ripple effects

The crunch is not limited to memory and storage. CPU supply has tightened in select segments, especially parts built on older manufacturing processes that are still in heavy use. Intel has flagged strong demand for server and PC chips made on its Intel 7 and Intel 10 processes, even as the company prioritizes newer designs for future launches. Businesses refreshing fleets for Windows 11, as well as new AI workloads in data centers, are keeping orders high. The supply of substrates that sit under those chips is also tight, which can cap output regardless of wafer availability.

Retailers in Japan report other side effects of the upgrade wave. Optical drives, which had fallen out of favor, are suddenly in demand as users moving to Windows 11 want to access existing disc libraries or burn backups. Internal Blu-ray models are hard to find in some shops, and even basic DVD-RW drives sell through faster than usual. Many modern PC cases have dropped 5.25-inch bays, so external drives are a common workaround, but those have seen spot shortages as well.

Upgrades collide with shortages

The end of Windows 10 support has pushed many home users and small offices to consider hardware upgrades sooner than planned. That has driven sales of mid-range CPUs, motherboards and RAM, the very tiers that face the most supply pressure. Some Akihabara stores allow higher purchase limits when buyers pick a full build, which helps ensure parts end up in working systems rather than in stockpiles. It also gives shops a way to balance fast-moving memory against slower-moving items like cases or power supplies.

For now, that approach can smooth daily operations, but it does not solve the upstream limits. If distributors cannot ship, store policies only manage the queue; they do not add supply.
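
A full-build exception like the one described above can be expressed as a small rule. The sketch below is a hypothetical illustration, not any shop's actual system. The cap values, category names and the definition of a "full build" (a cart containing a CPU, motherboard, case and power supply) are all assumptions.

```python
from collections import Counter

# Hypothetical numbers: real shops set their own caps and categories.
STANDARD_CAPS = {"ssd": 2, "hdd": 2, "ram": 2}
FULL_BUILD_CAPS = {"ssd": 4, "hdd": 4, "ram": 4}
DEFAULT_CAP = 5  # assumed cap for any category not listed above
# Assumed definition of a "full build": all of these parts are in the cart.
FULL_BUILD_PARTS = {"cpu", "motherboard", "case", "psu"}


def over_limit(cart: list[str]) -> dict[str, int]:
    """Return {category: units over the cap} for a cart of category names."""
    counts = Counter(cart)
    is_full_build = FULL_BUILD_PARTS.issubset(counts)
    caps = FULL_BUILD_CAPS if is_full_build else STANDARD_CAPS
    return {
        category: qty - caps.get(category, DEFAULT_CAP)
        for category, qty in counts.items()
        if qty > caps.get(category, DEFAULT_CAP)
    }


print(over_limit(["ram", "ram", "ram", "ssd", "case"]))
print(over_limit(["ram", "ram", "ram", "ssd", "cpu", "motherboard", "case", "psu"]))
```

The first cart is one memory kit over the standard cap. The second cart qualifies as a full build, so the same three kits pass. A rule like this decides who gets scarce stock, but it does nothing to increase how much stock arrives.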

Why scaling supply is hard in semiconductors

Adding meaningful capacity in semiconductors is slow and expensive. High-end fabs take years to plan and build, and the tools inside them are some of the most complex machines on the planet. Even if memory makers wanted to flood the market with more DDR4 today, converting lines or expanding floor space is not quick. Industry analyses suggest the balance can tip into shortage when demand rises by about 20 percent. AI demand is running well above that in several links of the chain, especially memory and advanced packaging.

Packaging is a major bottleneck. Techniques that connect chiplets, stack memory and route signals at speed require materials and equipment that are in short supply. Suppliers of substrates and chip-on-wafer-on-substrate (CoWoS) processes would need to grow capacity at a pace that is hard to achieve quickly. At the same time, data center builders face power constraints. Studies point to a shortfall of 15 to 25 gigawatts of data center capacity in Asia by the end of the decade, which means even funded projects can be slowed by the race for electricity and grid upgrades.

Factories take years

From land selection and permits to tool installation and qualification, a new fab can take three to five years before full output. Substrate plants and advanced packaging lines follow similar multi year timelines. If GPU demand for AI doubles within a short window, the rest of the supply chain must stretch in unison, which rarely happens cleanly.

Chip buyers and suppliers are also adjusting strategy. Many are moving away from just-in-time inventories to a just-in-case approach that accepts higher carrying costs in exchange for resilience. Long-term purchase agreements help secure parts and production slots, but they also lock in a larger share of future output for the biggest customers. That can leave retail channels with less flexibility when demand spikes.
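
One textbook way to size a "just in case" buffer is the safety-stock rule SS = z · σ · √L. Here z comes from the target service level, σ is the standard deviation of demand per period, and L is the replenishment lead time in periods. This is a generic inventory-planning rule of thumb, not a method the article attributes to any chip buyer. The demand figures below are hypothetical.

```python
import math
from statistics import NormalDist


def safety_stock(service_level: float, demand_std: float, lead_time: float) -> float:
    """Classic safety-stock estimate: z * sigma_demand * sqrt(lead_time).

    Assumes per-period demand is roughly normal and independent across
    periods, and that the lead time is fixed.
    """
    z = NormalDist().inv_cdf(service_level)  # z-score for the target service level
    return z * demand_std * math.sqrt(lead_time)


# Hypothetical buyer whose weekly demand has a standard deviation of 1,000 modules.
lean = safety_stock(0.90, 1_000, lead_time=4)       # short lead time, modest target
buffered = safety_stock(0.99, 1_000, lead_time=16)  # long lead time, high target
print(round(lean), round(buffered))
```

Raising the service target and lengthening lead times both enlarge the buffer. In this example the buffer is several times larger, and that extra inventory is the carrying cost a just-in-case strategy accepts.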

Japan specific context for supply and demand

Japan is leaning into AI across healthcare, manufacturing, mobility and finance. The country’s AI market was valued near 12 billion dollars last year and could pass 120 billion dollars by 2032 if current trends hold. That growth draws on Japan’s strengths in robotics and precision electronics. It also increases demand for domestic compute, network gear, memory and storage.
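
Those two market figures imply a steep compound annual growth rate (CAGR). The minimal sketch below assumes that "last year" means 2024, which puts eight years between the base and 2032. The helper name `cagr` is illustrative.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate implied by growing from start_value to end_value."""
    return (end_value / start_value) ** (1 / years) - 1


# Market figures from the text, in billions of US dollars.
# The 2024 base year is an assumption.
rate = cagr(12, 120, years=2032 - 2024)
print(f"Implied CAGR: {rate:.1%}")
```

A tenfold increase over eight years works out to roughly 33 percent a year. If the base year is 2023 instead, the same tenfold growth over nine years implies about 29 percent a year.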

Workforce constraints complicate the picture. A shortage of skilled engineers and technicians in both AI and semiconductors raises costs and can slow expansion. Companies and policymakers are pushing training programs and partnerships. Diversified supply chains, onshoring where practical and friend-shoring are becoming common risk controls.

Aging society pushes automation

Japan’s aging and shrinking population is a powerful driver for automation. Construction, logistics, agriculture and eldercare are adopting AI, robotics and cloud tools to maintain services with fewer workers. That adoption accelerates the need for compute and memory capacity, reinforcing the demand that is already tight.

Industry players are also exploring new memory concepts that could ease power and bandwidth constraints. Reports out of Japan point to a collaboration that aims to develop stacked DRAM with HBM-class performance and lower energy use. Even small projects in this area show how active the search is for solutions that can scale with AI without overwhelming data center power budgets.

What consumers and builders can do now

Shortages at retail do not mean PC building has to stop. It does mean a smarter plan and a willingness to adjust parts lists.

- Buy only what you will use in the next build. Avoid hoarding, since that worsens shortages and ties up budget.

- Consider prebuilt or store-assembled systems if they unlock fair purchase limits. Compare the total price with the cost of buying the parts separately before deciding.

- Price check DDR5 versus DDR4 platforms. A new CPU and motherboard with DDR5 can sometimes cost less than a large DDR4 upgrade once higher DDR4 prices are included.

- Look at capacity sweet spots. Two-stick kits of 16 GB or 32 GB often track better on price than single high-capacity sticks, depending on availability.

- Be flexible on SSD models. If a preferred NVMe drive is scarce, a similar-capacity drive from another brand or a SATA SSD can bridge the gap for storage-heavy workloads.

- Reuse parts where possible. Good quality cases, power supplies and coolers carry over and free budget for scarce items.

- Monitor reputable retailers and sign up for stock alerts. Avoid gray-market memory with uncertain origins or warranty.

- If you must upgrade an older DDR4 system, avoid overpaying. Smaller increments, like adding 16 GB instead of 32 GB, can keep costs in check until supply improves.

- Back up important data before swapping drives. Component shortages complicate returns and exchanges, so minimize risk during upgrades.
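
The DDR4-versus-DDR5 and capacity tips come down to comparing total cost and cost per gigabyte. The minimal sketch below does that comparison. Every price is a hypothetical placeholder to be replaced with current listings, and the option names and the `UpgradeOption` type are illustrative.

```python
from dataclasses import dataclass


@dataclass
class UpgradeOption:
    name: str
    memory_gb: int               # memory capacity this option adds
    memory_cost: float           # price of the memory kit(s)
    platform_cost: float = 0.0   # new CPU + motherboard, if the option needs them

    @property
    def total_cost(self) -> float:
        return self.memory_cost + self.platform_cost

    @property
    def memory_cost_per_gb(self) -> float:
        return self.memory_cost / self.memory_gb


def cheapest(options: list[UpgradeOption], min_gb: int) -> UpgradeOption:
    """Pick the lowest total-cost option that meets the capacity target."""
    eligible = [o for o in options if o.memory_gb >= min_gb]
    if not eligible:
        raise ValueError("no option meets the capacity target")
    return min(eligible, key=lambda o: o.total_cost)


# Hypothetical placeholder prices in US dollars; substitute live listings.
options = [
    UpgradeOption("Add 16 GB DDR4 to current system", 16, 120),
    UpgradeOption("Add 32 GB DDR4 to current system", 32, 240),
    UpgradeOption("New DDR5 platform with 32 GB", 32, 200, platform_cost=350),
]

for o in options:
    print(f"{o.name}: total ${o.total_cost:.0f}, ${o.memory_cost_per_gb:.2f}/GB")
print("Cheapest for 32 GB:", cheapest(options, min_gb=32).name)
```

With these placeholder prices, the DDR4 upgrade wins. If DDR4 prices climb far enough, or a platform bundle is discounted, the result flips, so the comparison is worth rerunning whenever prices move.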

The environmental backdrop

The AI surge has a real footprint beyond store shelves. Data centers already consume hundreds of terawatt-hours each year, and AI workloads raise that draw with dense racks of GPUs, memory and storage. Cooling those systems uses large volumes of water, and the electricity mix in many regions still leans on fossil fuels. That means each new wave of AI deployments adds to carbon emissions unless operators add clean power at the same time.

Training a frontier-scale model can consume enough electricity to power more than a hundred homes in the United States for a year. The lifetime cost is not just in training. Daily inference queries, which are growing fast, add steady energy demand. Hardware manufacturing also carries a carbon cost, from mining and chemicals to complex fabrication. The push for more efficient memory and packaging is not only about performance; it is also about reducing energy use per unit of compute.
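
The homes comparison is a unit conversion: divide a training run's energy by the electricity one household uses in a year. The minimal sketch below assumes a household figure of about 10,500 kWh per year, which is close to commonly cited US averages. The training energy in the example is hypothetical and does not describe any specific model.

```python
def household_years(training_mwh: float, household_kwh_per_year: float = 10_500) -> float:
    """Convert training energy (MWh) into years of electricity for one household.

    household_kwh_per_year is an assumed average; adjust it for the region in question.
    """
    return training_mwh * 1_000 / household_kwh_per_year


# Hypothetical training run of 1,500 MWh.
print(f"{household_years(1_500):.0f} household-years")  # prints "143 household-years"
```

Under this assumption, any run above about 1,050 MWh clears the "more than a hundred homes" bar.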

Outlook for 2025

Near-term conditions point to continued tight supply of SSDs, HDDs and DRAM for consumers in Japan. AI demand is likely to keep memory and storage allocations pointed toward data centers. Advanced packaging and substrate constraints will take time to ease. DDR4 availability should remain choppy, and buyers who need to extend older systems may face higher prices through much of the year.

Relief will come in steps. New packaging lines, substrate plants and memory capacity are ramping, but the effect will arrive over several quarters, not weeks. Retail rationing in Akihabara is a pragmatic response to keep parts flowing to more buyers. Watch for periodic restocks, bundle deals tied to full builds and price swings as shipments land. Builders who stay flexible on platforms and brands will have the best chance to complete projects without overpaying.

Key Points

- Akihabara shops are limiting SSD, HDD and RAM purchases as distributor shipments slow and inventories shrink.

- AI infrastructure is absorbing memory and storage at scale, pulling supply away from consumer retail.

- Memory prices have roughly doubled in some tiers, with DDR4 hit hardest as production shifts to DDR5 and HBM.

- CPU supply is tighter for parts on older manufacturing processes, driven by data center needs and Windows 11 upgrades.

- Optical drive demand in Japan has spiked as users move to Windows 11 and want access to disc libraries.

- Advanced packaging and substrate supply are key bottlenecks that cannot expand quickly.

- Japan’s AI market is growing fast, and demographic pressures are accelerating automation across industries.

- Reports of new memory research efforts show the search for lower power, high bandwidth solutions.

- Consumers can navigate shortages by avoiding hoarding, staying flexible on parts and considering full builds if purchase caps apply.

- Energy and water use from AI workloads add environmental costs, raising the value of efficient memory and compute.