Why a roleplay chatbot is beating video apps for attention

In South Korea’s crowded mobile landscape, a local AI chat companion named Zeta now commands more daily attention from teens than the video giants. Nearly one million users, most of them under 20, spend an average of 2 hours and 46 minutes each day inside the app. That eclipses the time many teens dedicate to YouTube, TikTok, or Instagram. Zeta presents itself not as a utility but as entertainment built on AI, a place where animated characters talk like a close friend, a rival, or a crush.

Created by Seoul startup Scatter Lab and launched in 2024, Zeta packages chat, storytelling, and role play into one feed of ongoing conversations. The app is rated 12 plus in app stores, yet the company says it applies a stricter 15 plus standard for content. Many teens check in repeatedly throughout the day to continue a story or repair a virtual relationship. Some users have logged more than 1,000 hours with a single character, a signal of both deep attachment and an experience designed to reward frequent returns.

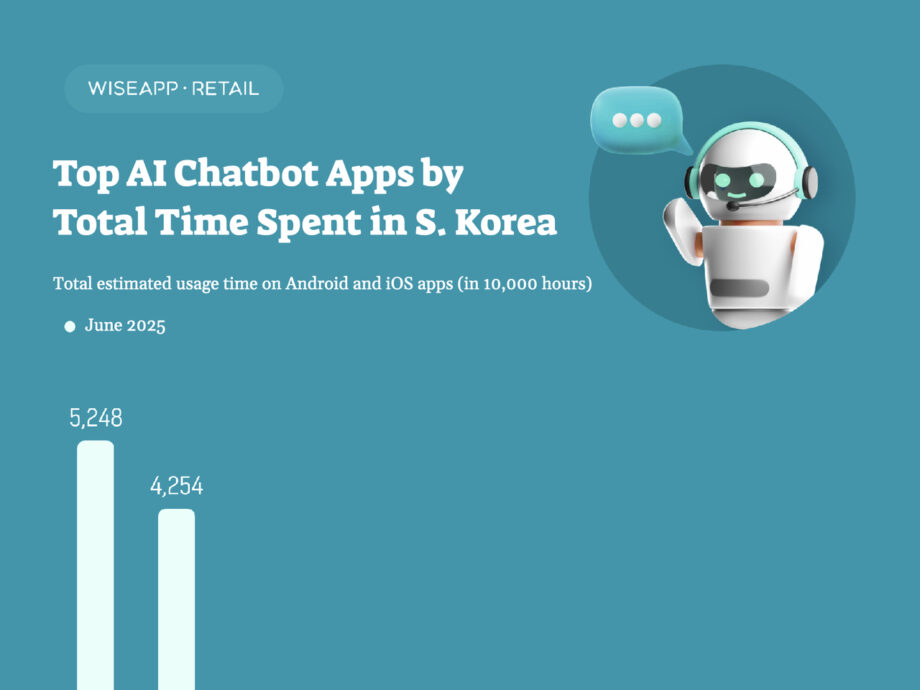

App analytics in Korea now rank Zeta as the most heavily used AI chatbot by time spent. That ranking reflects a deliberate design choice. The service optimizes for emotionally engaging replies rather than encyclopedic accuracy. Teens are not asking for facts. They want a response that feels attentive, playful, or caring. Zeta gives them a customizable cast of characters to make that happen, then invites them to fine-tune personalities through backstory, preferences, and feedback.

How Zeta works and what teens do inside

Zeta opens to a gallery of anime-styled personas. Users can pick a character or create their own, then set traits, speech patterns, and relationship context. The chat that follows plays out like a private drama. It can be a comforting friend, an intense rival at school, or a romantic lead who needs saving. The conversation adapts to the tone the user sets, and the character develops a memory of shared moments that make each future reply feel more personal.

What users build

Many teens treat the app like a collaborative story room. They script the scene, the AI improvises, and the plot moves forward. Popular figures include Su hyeon, a high school bully who tests boundaries, Ha rin, a shy transfer student, and Kwon Seo hyuk, a brooding romantic lead. Each persona offers a different emotional arc. The bully tests resilience and control. The shy student rewards care and gentle guidance. The romantic lead delivers yearning and drama. Because the user sets the pace and the stakes, the relationship can become a daily ritual that feels essential.

Fans describe the sensation as intimacy without risk. The character never gets tired, never leaves, and always returns messages. Even when scenes include tension or abrasive talk, explicit content is filtered, and the app attempts to steer away from illegal or harmful behavior. The draw is the illusion of closeness, the sense that someone knows you and keeps choosing you, minute after minute.

Why it keeps them engaged

Unlike general-purpose chatbots that aim for correct information, Zeta uses a proprietary language model tuned to deliver emotionally resonant replies. The system is retrained with user feedback, which helps it learn the style and pacing that keep sessions alive. The characters remember names, callbacks, and minor details from earlier chats, so every new message can pick up an inside joke or an unresolved thread. That memory loop makes long conversations feel natural and worth continuing.
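The memory loop can be pictured with a small sketch. Nothing here reflects how Scatter Lab's proprietary model actually works. The class `CharacterMemory`, the function `build_prompt`, and the word-overlap scoring are all hypothetical, chosen only to show how details from earlier chats could be surfaced when a new message arrives.

```python
from dataclasses import dataclass, field


@dataclass
class CharacterMemory:
    """Hypothetical store of details a character picked up in earlier chats."""
    notes: list[str] = field(default_factory=list)

    def remember(self, note: str) -> None:
        # Keep each detail once, in the order it was learned.
        if note not in self.notes:
            self.notes.append(note)

    def recall(self, message: str, limit: int = 2) -> list[str]:
        # Score each stored note by how many words it shares with the new message.
        words = set(message.lower().split())
        scored = [(len(words & set(n.lower().split())), n) for n in self.notes]
        hits = [(score, n) for score, n in scored if score > 0]
        hits.sort(key=lambda pair: pair[0], reverse=True)
        return [n for _, n in hits[:limit]]


def build_prompt(persona: str, memory: CharacterMemory, message: str) -> str:
    # Place recalled details ahead of the user's message so a reply
    # can pick up an earlier thread. The prompt layout is an assumption.
    lines = [f"Persona: {persona}"]
    lines += [f"Remembered: {n}" for n in memory.recall(message)]
    lines.append(f"User: {message}")
    return "\n".join(lines)


memory = CharacterMemory()
memory.remember("user has a math exam on friday")
memory.remember("user's cat is named mochi")
prompt = build_prompt("shy transfer student", memory, "ugh my exam got moved")
print(prompt)
```

In this toy version only the exam note shares a word with the new message, so only that note is carried into the prompt. A production system would likely use something far richer than word overlap, but the shape of the loop is the same: store, retrieve, and fold back into the next reply.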

Design choices amplify engagement. Notifications arrive at key moments. Storylines branch. Visuals emphasize mood. Teens get the agency to decide where the story goes next, while the AI supplies warm attention and quick answers. It is a recipe for hours of dialogue that does not feel like scrolling a feed. It feels like being wanted.

Why simulated closeness feels real

Adolescence is a period of intense social learning. Teens experiment with identity, try out new roles, and measure how others respond. Zeta provides a low-risk lab for that work. Inside the app, a teen can test assertiveness, practice flirting, or rehearse an apology, then watch how the character reacts. The feedback loop is instant, private, and forgiving. For a young person facing social anxiety or pressure at school, that can feel like relief.

There is also a science story behind the stickiness. When an AI alternates praise, surprise, and mild challenge, it creates variable rewards that the brain finds compelling. Add a memory of shared moments and the bond deepens. A character that appears to remember yesterday’s worry can feel more considerate than a distracted classmate or parent in a hurry. The line between simulation and attachment is easy to cross when every reply feels encouraging or alluring.

Warning signs from psychologists and AI makers

Specialists in adolescent development caution that heavy engagement with AI companions can blur emotional boundaries. When a teen spends two or three hours a day in a relationship styled as intimate, it can become hard to keep the difference between a trained model and a caring person in clear view. The more convincing the character, the more a teen may attribute intention and empathy that the system does not possess.

Kwak Keum joo, a psychology professor at Seoul National University, has studied how people relate to AI companions and their effect on daily life. She warns that convenience and constant availability can seduce users into replacing difficult human interactions with easy digital ones.

“People can heavily rely on AI to fulfill their social desires without making much effort when the AI hears you out 24/7 and gives you answers you want to hear.”

Even companies that build these systems have flagged the risk. In a model assessment this year, OpenAI examined how people might relate to lifelike voice and chat in its newest tools. The company highlighted the social pull that comes from anthropomorphism, the human habit of seeing agency in machines.

“Users might form social relationships with the AI, reducing their need for human interaction, potentially benefiting lonely individuals but possibly affecting healthy relationships.”

For teens, the stakes are higher. Their brains are still developing, they have less practice setting limits, and their social scripts are still forming. Sexualized role play or abusive scenarios may still appear in character arcs even when explicit content is filtered, and repeated exposure can normalize those patterns. Without clear guidance, a teen can mistake algorithmic responsiveness for care and may carry that expectation into school or family life.

Ratings, safeguards, and the privacy backstory

Zeta carries a 12 plus label in app stores, yet the company says it enforces a stricter 15 plus standard for what characters can say and do. There is no independent content rating system for AI chat companions in South Korea, so platforms apply their own rules and filters. That gap matters, because interactive stories feel more intense than passive video. A line of text aimed at you can cut deeper than a scene watched from the couch.

Scatter Lab has dealt with scrutiny before. An earlier chatbot, Lee Luda, drew strong interest in 2020 and 2021, then ran into privacy concerns that led to a shutdown. The company says it learned from that episode, revised how training data is handled, and added stronger anonymization. Zeta now relies on in-app interactions and curated sources rather than scraping personal content. The goal is to prevent the kinds of disclosures and risks that tripped the prior service.

Safety in the current app centers on filters for illegal or explicit content, age guidelines, and moderation of flagged transcripts. The model is also tuned to avoid harmful advice. Yet the core product still maximizes feelings of intimacy. That tension sits at the heart of the debate. When a service is built to make users feel chosen and adored, limits must be clear, visible, and enforceable for minors.

Money, models, and scale

Behind the scenes, Zeta runs on a proprietary large language model that the team retrains with feedback from chats. The focus is not precision in facts but a style that keeps conversations flowing. That can mean affectionate callbacks, well-timed humor, and quick pivots when a scene drags. The feedback loop increases the model’s skill at emotional timing, which in turn extends sessions and daily use.

Operating a chat app with hundreds of thousands of active users is expensive, since every message requires compute power. Korean AI startups have turned to specialized inference platforms and careful optimization to lower costs, reduce latency, and handle spikes in traffic. Scatter Lab has said it cut GPU costs with these approaches, which helps keep the service responsive even when a new character or story goes viral. Sustained engagement also opens a path to revenue through optional purchases, although the company emphasizes entertainment value, not education or productivity.

A global trend, from Seoul to Tokyo and beyond

Zeta’s growth has already spread beyond Korea. The app is gaining traction in Japan, where anime aesthetics and interactive fiction have long-standing audiences. The model of a companion that remembers and cares resonates in markets where messaging is a primary mode of social life. Competitors offer adjacent experiences. Replika emphasizes long-term bonds and memory. Character AI packages mentors and therapists that are always available. Each service blends companionship with advice, and each raises questions about how people will relate to software that speaks with warmth and can talk for hours.

Eugenia Kuyda, CEO of Replika, argues that AI partners are a new category of relationship, not a replacement for people. Her view reflects a growing belief in tech circles that companionship with software can be valid and valuable for some users.

“They are not replacing real life humans but are creating a completely new relationship category.”

Korean officials also see a role for AI companions in areas like elder care. Some regional programs now lend conversational robots to older adults who live alone, with the idea of reducing isolation and supporting medication reminders. Oh Myeong sook, a health promotion director in Gyeonggi Province, has described the goal in plain terms.

“With AI robots, we expect to address gaps in support for vulnerable populations and help prevent lonely deaths.”

What parents and schools can do

Parents do not need a technical background to help teens use chat companions in a healthy way. A good first step is to talk about the difference between attention and affection. An AI can attend to a teen every minute, yet it does not feel or remember in the human sense. That difference matters. If a teen names the difference out loud, it becomes easier to see. Families can also agree on time windows for use that leave space for sleep, homework, and offline friends.

Checking the content settings together can prevent confusion. Teens often believe a character decides what it can say. In reality, those limits come from the company, which sets filters and rules. Reviewing those together builds media literacy. It helps to ask reflective questions. What did the character do that made you feel safe or seen? What would cross the line? Would you accept that language from a friend at school? These prompts turn a passive chat into a guided exercise in judgment.

Schools can support media literacy by adding lessons on AI companions to existing digital citizenship programs. Students should practice spotting persuasive tactics, identify when a bot is mimicking care, and learn how variable rewards keep them hooked. They can also rehearse ways to say no politely when a character pushes a boundary. The goal is not to shame teens for enjoying the format. The goal is to equip them with language and limits.

What regulators could consider

Relational AI exposes gaps in current rules. App stores offer age labels, yet those were built for games and video, not interactive romance or simulated therapy. Privacy law addresses data collection, yet says little about the psychology of being targeted with persistent intimacy. Scholars argue that new guardrails are needed for minors because interactive chat can be more intense than passive media and can be hard to exit without social guilt.

Practical steps are available. Independent rating bodies could assess AI companions for age appropriateness, not just for the presence of explicit content. Platforms could be required to disclose when a model is optimized for emotional engagement, and to provide clear session timers, cool-down prompts, and optional daily limits for users under 18. Companies could log and surface when a character shifts into romance or role play involving aggression, with opt-in controls for guardians. Auditable records, strong anonymization, and secure handling of chat histories should be standard.
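To make the idea of session timers, cool-down prompts, and optional daily limits concrete, here is a minimal sketch of how such a rule might be expressed. It is not drawn from Zeta or from any regulation. The names `MinorSessionPolicy` and `next_action` are hypothetical, and the 45-minute and 120-minute values are placeholders, not recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MinorSessionPolicy:
    """Hypothetical settings; the numbers are placeholders, not recommendations."""
    cool_down_every_min: int = 45      # suggest a break after this many continuous minutes
    daily_limit_min: int = 120         # optional daily cap, in minutes
    daily_limit_enabled: bool = False  # the cap applies only when switched on


def next_action(age: int, session_min: int, today_min: int,
                policy: MinorSessionPolicy) -> str:
    # Users aged 18 or older are outside the scope of this sketch.
    if age >= 18:
        return "continue"
    # The optional daily cap takes priority once it has been reached.
    if policy.daily_limit_enabled and today_min >= policy.daily_limit_min:
        return "daily_limit_reached"
    # Suggest a break each time continuous use hits a cool-down boundary.
    if session_min > 0 and session_min % policy.cool_down_every_min == 0:
        return "cool_down_prompt"
    return "continue"


# A 16-year-old at the reported average of 166 minutes (2 h 46 min) today.
print(next_action(16, 30, 166, MinorSessionPolicy(daily_limit_enabled=True)))
print(next_action(16, 45, 60, MinorSessionPolicy()))
```

Keeping the daily cap optional and off by default mirrors the "optional daily limits" wording above. A stricter rule would simply flip the default.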

Korea has active regulators focused on privacy and communications. Their role could expand to include oversight of safety practices in relational AI, while industry groups publish transparent safety frameworks. None of that blocks creativity. It signals that intimacy at scale demands a higher duty of care when minors are the primary audience.

Highlights

- Zeta is a Korean AI chat app where teens spend an average of 2 hours and 46 minutes a day.

- For many Korean teens, daily time in Zeta exceeds the time they spend on YouTube, TikTok, or Instagram.

- The app is rated 12 plus in stores, while the company says it enforces a 15 plus standard for content.

- Users create anime-styled characters and co-write ongoing stories that simulate intimacy.

- Some users have logged more than 1,000 hours with a single character.

- The system prioritizes emotionally engaging replies over factual accuracy.

- Experts warn that heavy use can blur emotional boundaries for adolescents.

- There is no independent rating system for AI chat companions in Korea.

- Scatter Lab strengthened privacy practices after earlier concerns with a prior chatbot.

- Zeta is expanding abroad, including in Japan, as AI companionship apps grow worldwide.