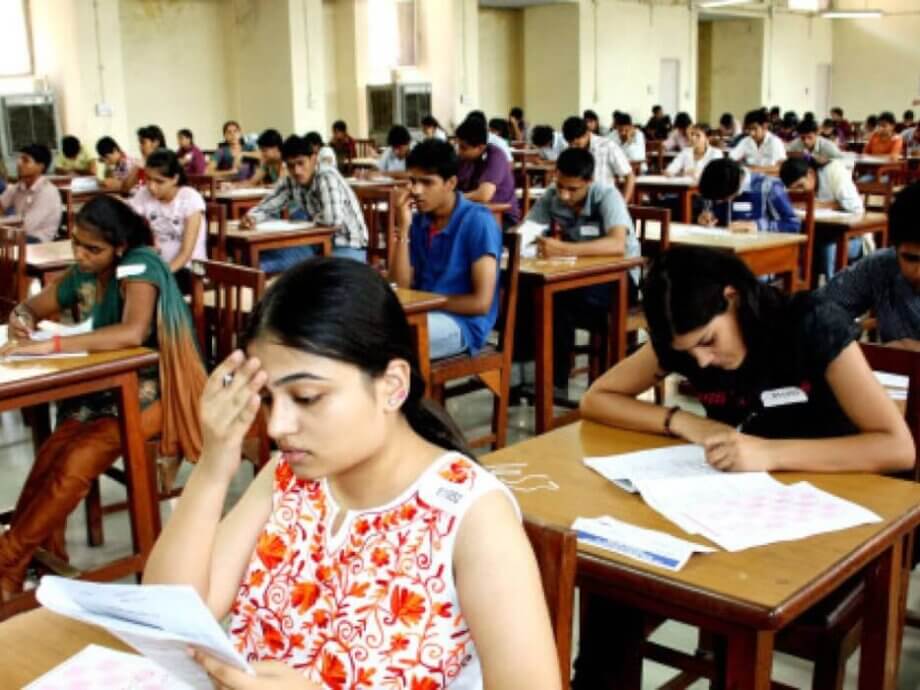

The Rise of AI Misuse in Indian Higher Education

In recent years, Indian colleges and universities have found themselves at the crossroads of technological innovation and academic integrity. The rapid adoption of generative artificial intelligence (AI) tools—such as ChatGPT, Gemini, and Copilot—has transformed how students approach assignments, research, and even basic communication. While these tools offer efficiency and new learning opportunities, they have also sparked widespread concern among educators about the erosion of independent thinking, the authenticity of student work, and the future of traditional pedagogy.

Across campuses in Bengaluru, Bhopal, and beyond, professors are witnessing a fundamental shift in student behavior. Assignments, essays, emails, and presentations are increasingly being generated or heavily assisted by AI. Instead of taking notes or reading assigned texts, many students now feed course materials into AI systems to produce concise summaries or even complete assignments. This trend, while not unique to India, has prompted a particularly robust response from Indian educators, who are now experimenting with oral quizzes, handwritten exams, and imaginative assignments to counteract the misuse of AI.

Why Are Students Turning to AI?

For many students, the allure of AI is simple: it saves time and effort. Faced with dense, 120-page technical documents or looming deadlines, students like Tejas P.V., a computer engineering graduate, see AI as a practical research assistant. “AI saves time. It helps us research by locating references. For lengthy, boring documents, AI helps to identify the crucial pages for us to focus on,” he explains. Others, like environmental science student Keerthana S., admit that the temptation to use ChatGPT grows as deadlines approach, even if the output sometimes feels impersonal or overly technical.

Some students justify their use of AI by pointing to the realities of the modern workplace, where efficiency is prized and AI-powered tools are becoming standard. “Even after I get a job, my bosses are not going to expect me to waste time on manually doing these basic things,” notes an engineering student who uses AI to complete programming assignments.

Yet, beneath the surface, there are deeper motivations. English professor Greeshma Mohan observes that many students, especially those from non-English-medium backgrounds, turn to AI out of insecurity about their writing skills. “Students use AI because of insecurities that their own writing and ideas are not good enough, and because AI sounds fancier,” she says. This reliance, however, can prevent students from learning through trial and error—a crucial part of intellectual growth.

Professors’ Concerns: The Erosion of Independent Thinking

Educators across disciplines are sounding the alarm about the long-term consequences of unchecked AI use. Dr. Adil Hossain, who teaches history and sociology at Azim Premji University, laments the loss of joy in teaching as he struggles to distinguish between student-generated and AI-generated content. “It’s a shortcut for students. But for teachers, it’s more laborious to separate the AI content from the students’ content. The joy of teaching is gone,” he remarks.

Assistant Professor Ananya Mukherjee, a biology instructor, notes that even when she selects controversial topics to spark genuine debate, students often rely on AI to generate talking points. “Independent thinking, which is the whole point of science, is getting lost,” she warns. In technical fields, the problem is equally acute. Assistant Professor Prem Sagar, who teaches computer applications, observes that while AI can be a helpful tool for debugging code, overreliance undermines the logical reasoning skills that are foundational to programming.

These concerns are not limited to India. In the United States, for example, professors are redesigning courses to include more in-class writing, oral exams, and group work, as take-home assignments become increasingly vulnerable to AI-assisted plagiarism.

Detection and Discipline: The Cat-and-Mouse Game

To combat AI misuse, Indian universities have implemented a range of disciplinary measures. Most institutions require professors to report suspected cases of AI-generated work, but enforcement varies widely. Some faculties are strict, failing students caught using AI. Others ban electronic devices during exams or require all essays to be handwritten. Some allow limited AI use for grammar correction or research, while others demand multiple rewrites until the work is deemed AI-free.

Detection tools like Turnitin and the Indian-developed DrillBit now include features to flag AI-generated text, but these systems are far from foolproof. In a notable case, law student Kaustubh Shakkarwar sued O.P. Jindal Global University after being failed for alleged AI use, challenging the accuracy of Turnitin’s detection. The university eventually reversed its decision, highlighting the limitations and potential for false positives in current detection technologies.

Professors often rely on their familiarity with students’ writing styles to spot AI-generated work. Telltale signs include the use of em dashes, formulaic phrases like “that being said,” and essays that present a suspiciously balanced array of opinions. Some students attempt to “humanize” AI text using tools like BypassGPT, WriteHuman, or QuillBot, but the best services are prohibitively expensive for many Indian students.

Innovative Countermeasures: Oral Quizzes and Handwritten Exams

Faced with the limitations of software detection, Indian educators are turning to more traditional and creative assessment methods. Oral quizzes have become a popular tool for verifying whether students understand the content of their written assignments. Professor Pallavi K.V. at AMC Engineering College now routinely quizzes students on their submissions to ensure they have actually read and comprehended the material.

Handwritten exams are making a comeback, much to the chagrin of students who have grown accustomed to typing. These exams are seen as a way to ensure authenticity, as it is far more difficult to use AI tools in a live, supervised setting. Some professors have gone further, designing assignments that are inherently resistant to AI shortcuts. For example, anthropology lecturers may require audio recordings of field interviews, while law professors create simulation exercises based on landmark cases.

Dr. Swathi Shivanand at the Manipal Academy of Higher Education devised an assignment asking students to imagine a dialogue between two historical figures—a task that demands creativity and personal engagement. Similarly, in creative writing classes, assignments set in the local college environment with characters based on real students have proven effective at discouraging AI use.

Adapting to the Inevitable: Responsible AI Integration

Recognizing that AI is here to stay, some educators are shifting their approach from outright prohibition to responsible integration. Assistant Professor Arpitha Jain at St Joseph’s University uses ChatGPT to generate multiple-choice questions from printed readings, challenging students to engage more deeply with the material. While students initially resisted, some later adopted this method to study other subjects more effectively.

Assistant Professor Prem Sagar now trains fellow faculty members to use AI not only for lesson planning and presentations but also for providing granular feedback to students. By leveraging data analytics, educators can identify patterns in student performance and tailor their teaching accordingly.

Importantly, some professors are teaching students to critically evaluate AI outputs. Professor Pallavi K.V. highlights the risks of AI-generated misinformation and bias, using real-world examples such as AI-driven resume screening that can perpetuate gender and racial biases. She also warns students about the privacy risks of uploading personal data to viral AI applications.

Global Context: How Other Universities Are Responding

The challenges faced by Indian colleges are mirrored in universities worldwide. In the United States, the release of ChatGPT and similar tools has prompted a wave of course redesigns. Professors are moving away from take-home essays and open-book assignments, instead favoring in-class writing, oral exams, and group projects. Some are crafting assignments that require personal reflection or analysis of current events—tasks that are less amenable to AI automation.

Universities are also updating academic integrity policies to explicitly address generative AI. Detection tools like GPTZero are being adopted, and discussions about the ethical use of AI are being embedded into freshman orientation and required courses. The goal is not only to prevent cheating but to foster a culture of responsible and informed AI use.

Some educators are even incorporating AI into the curriculum, asking students to critique AI-generated responses or compare them to professional work. This approach aims to develop students’ critical thinking and digital literacy, skills that are increasingly essential in an AI-driven world.

The Broader Implications: Rethinking Education in the Age of AI

The rise of AI in education raises profound questions about the nature of learning, assessment, and academic integrity. While AI can democratize access to information and assist students with diverse needs, it also threatens to undermine the development of independent thought, creativity, and authentic communication.

There are also practical concerns. AI tools consume significant amounts of energy: by some estimates, a single query to a system like ChatGPT can use up to ten times as much electricity as a standard web search. Moreover, the proliferation of AI-generated content complicates the task of evaluating student work and maintaining fair assessment standards.

At the same time, the debate over AI in education is part of a larger conversation about the role of technology in society. As AI systems become more sophisticated and autonomous, questions about bias, privacy, and accountability become ever more pressing. Educators, policymakers, and technologists must work together to develop guidelines and frameworks that balance innovation with ethical responsibility.

In Summary

- Indian colleges are experiencing a surge in AI misuse, with students using generative tools for assignments, essays, and even basic communication.

- Professors are concerned about the loss of independent thinking, the authenticity of student work, and the erosion of traditional learning processes.

- Detection tools like Turnitin and DrillBit are used to flag AI-generated content, but they are not foolproof and can produce false positives.

- Educators are responding with oral quizzes, handwritten exams, and creative assignments designed to resist AI shortcuts and encourage personal engagement.

- Some professors are integrating AI responsibly into their teaching, using it for question generation, feedback, and digital literacy education.

- The challenges faced in India reflect a global trend, with universities worldwide rethinking assessment methods and academic integrity policies in response to AI.

- The broader implications of AI in education include concerns about energy consumption, bias, privacy, and the future of authentic learning.

- Ultimately, the goal is to strike a balance between leveraging AI’s benefits and preserving the core values of education: critical thinking, creativity, and integrity.